K-means clustering

In unsupervised learning, we now only have features (, where ) and no corresponding labels . Therefore, we are not looking to make predictions. Instead, we are interested in uncovering structure in the feature vectors themselves.

In this lecture, we introduce K-means clustering. As we saw with KNN, sometimes we may suffer from the [curse of dimensionality](./2021-09-02-K-Nearest-Neighbors.md/#Curse of Dimenisionality), but any dataset that we can make predictions on must have “interesting” structures. K-Means will discover the clustered structure of the high dimensional data. (This is a bit different from PCA, which discovers low dimensional structure. PCA transforms high dimensional data points to be desribed by some fewer lower dimensional vectors. K-Means, on the other hand, describes a data point using its relation with each clusters: where describes ’s relation with cluster )

Objective¶

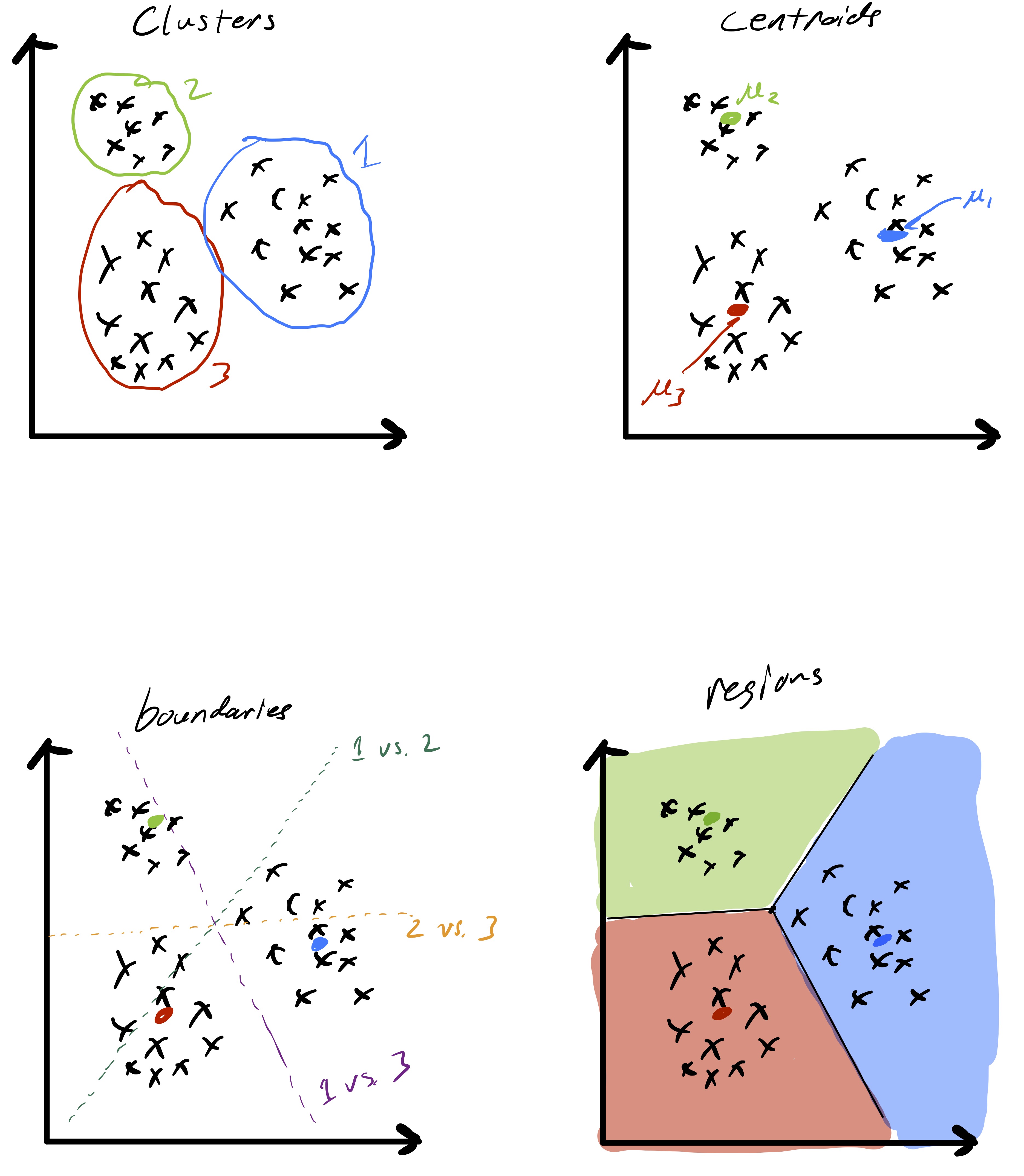

Given a set of data points with , the goal of k-means clustering is to partition the data into groups where each group contains similar objects as defined by their Euclidean distances. Formally, we want to partition the n feature vectors into clusters .

We now define what it means for a clustering to be “good.” We want to find clusters where the data points within each cluster are tightly grouped (i.e., exhibit low variability). Recall , so is the euclidean distance between the vectors and Mathematically, for any cluster assignment we define

The smaller is, the better we consider the clustering. Roughly speaking, we are trying to pick a clustering that minimizes the pairwise squared distances within each cluster (normalized by the cluster sizes).

The definition of can be rewritten in terms of the centroid of each cluster: $$

\mu_{\ell} = \frac{1}{|\mathcal{C}{\ell}|}\sum\limits{i \in \mathcal{C}{\ell}}x{i}, Z_\ell = \sum\limits_{i \in \mathcal{C}{\ell}} \parallel x{i} - \mu_{\ell} \parallel_{2}^{2} $ \mu_{\ell} \ellZ_\ell$ 为各 cluster 中所有点与其对应 centroid 的距离和

Now that we have a formal way to define a good clustering of points, we would like to solve

Unfortunately, solving this equation is not computationally feasible. We cannot do much better then simply trying all possible way partitions of the data and seeing which one yields the smallest objective value, so we have to relax our goal of finding the “best” clustering.

Lloyd’s algorithm for k-means¶

The practical approach we will take finding a good clustering is to use a local search algorithm.

- Local Search: 给一个现有的解决方案, interactively update it to a better and better clustering until it converges

- Converge: Do another local search, if our update result yields the same clusters we already have, we have a converge

This does not ensure convergence to the global solution.

Input: data and

Initialize

While not converged:

- Compute for [ - 每个 feature vector 都对应一个 centroid]

- Update by assigning each data point to the cluster whose centroid it is closest to. [ - 每个 feature vector 都要与 个 centroid 算距离]

- If the cluster assignments didn’t change, we have converged.

Return: cluster assignments

This process actually has a nice feature that the objective function is non-increasing (and only fails to decrease if the cluster assignments have converged). This is like loop invariant - termination.

The cost of Lloyd’s algorithm is per iteration. 注意每次计算(加法或乘法)花费的时间都与 dimension 有关。In practice its efficiency is strongly dependent on how many iterations it takes to converge.

How many clusters and how they are initialized¶

These 2 are the implicit input / super parameters we have to decide for a KNN algorithm.

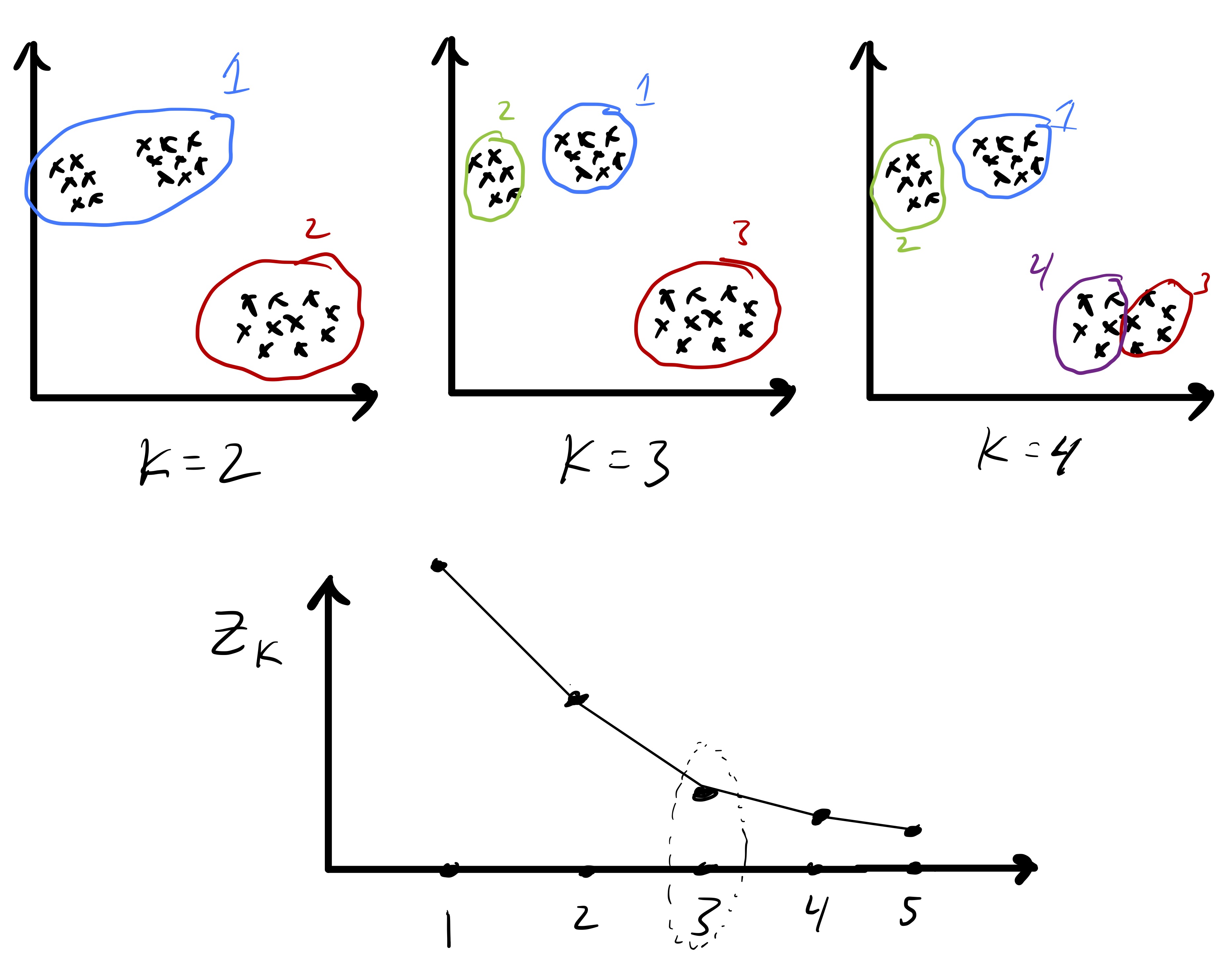

Cluster Number ¶

We want to pick a that minimizes

However, this is problematic because we expect the in-cluster variation to decrease naturally with In fact, if we have exactly clusters, since every point is its own cluster. So, instead, we often look for the point at which stops decreasing quickly.

The intuition is that as if a single big cluster naturally subdivides into two clear clusters we will see that . In contrast, if we see , this might mean we have spitted up a clear cluster that does not naturally subdivide.

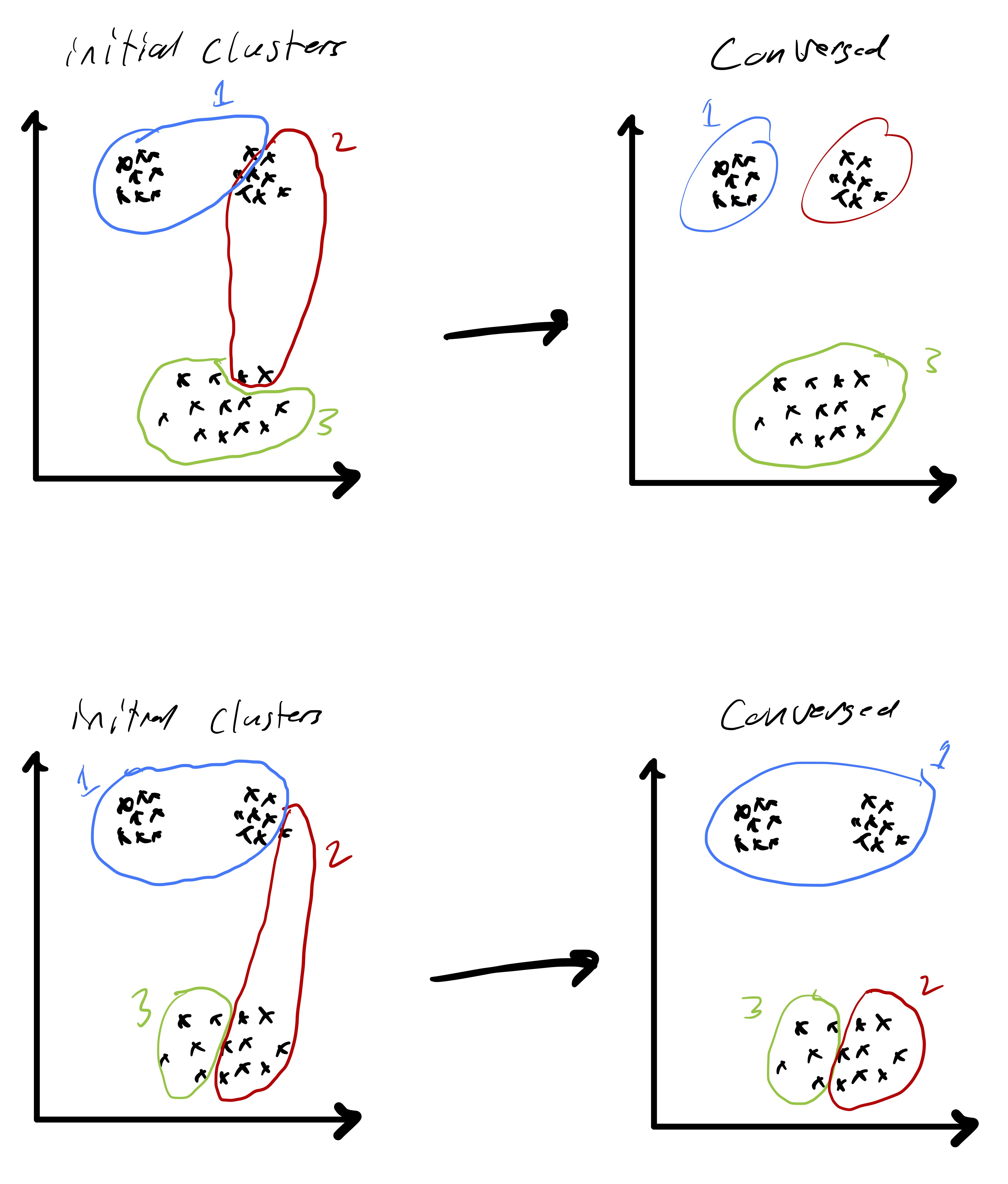

Initial Cluster Assignment¶

Initial cluster assignment This is particularly important because Lloyd’s algorithm only finds a local optima. So, very different initialization could result in convergence to very different solutions.

We can

- Choose several random clusterings and choose the one that gives the best result.

- Choose some random centroids, assign the rest points according to a weighted probability so that the farther they are from a centroid, the more likely they will be assigned to it. This is based on the idea that the cluster centers should be somewhat spread out.

Decision boundaries¶

As said at the very beginning, unsupervised learning algorithm finds underlying structure of data points.

Observe that given two cluster centroids and , the set of points equidistant from the two centroids defined as is a line (就是 和 的中位线). This means that given a set of cluster centroids they implicitly partition space up into domains whose boundaries are linear.